Deep Learning-Based Segmentation and Grading of Oral Squamous Cell Carcinoma: A Comparative Analysis of Architecture-Specific Performance on Augmented Multi-Magnification Histopathological Datasets

Code: G-1946

Authors: Soussan Irani *, Alireza Fallahi, Hamed Ghadimi ℗

Schedule: Not scheduled

Tag: Medical image processing


Abstract:


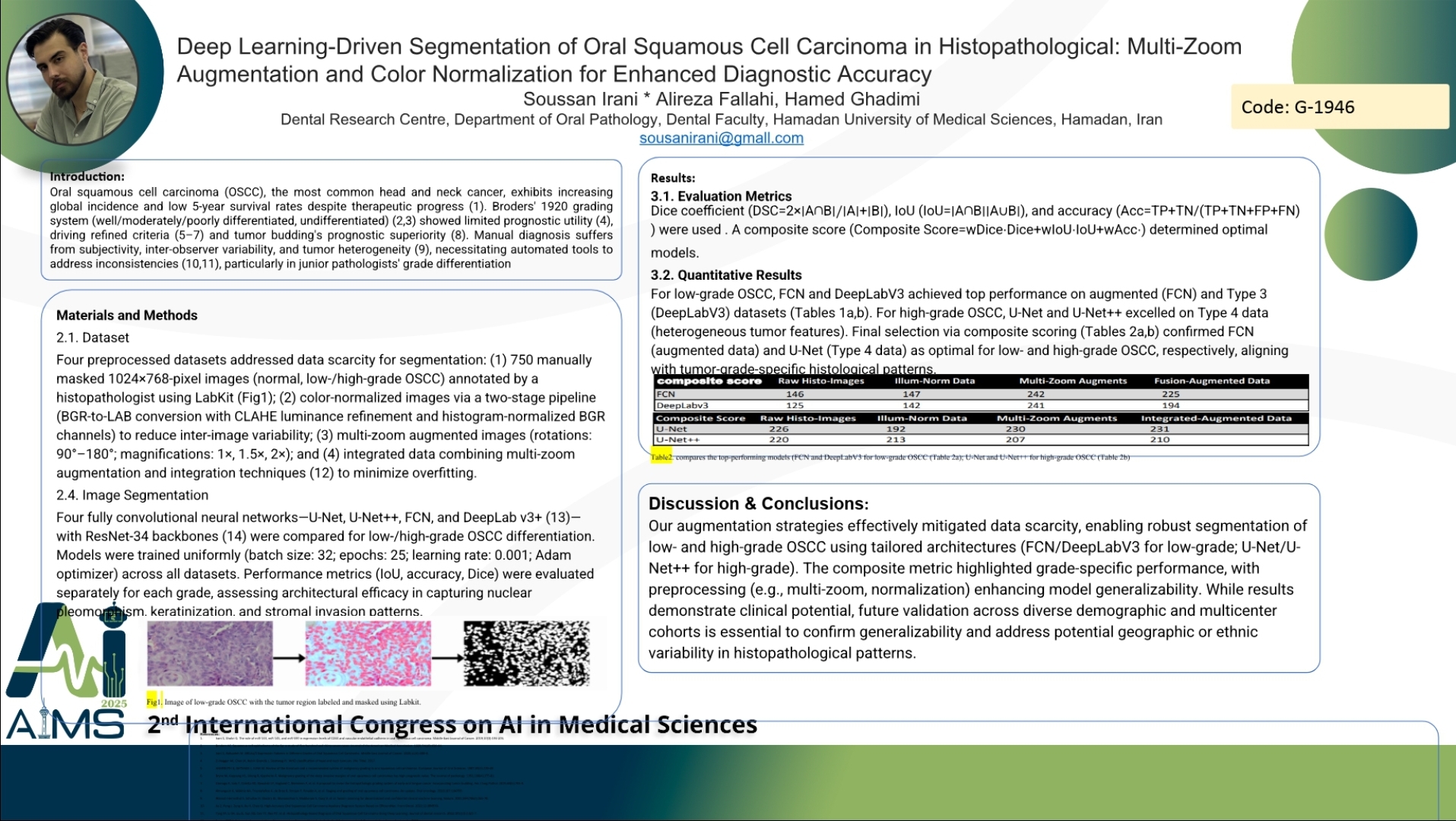

Background and Aims: Oral squamous cell carcinoma (OSCC), the most common head and neck cancer, has a rising global incidence and persistently low 5-year survival rates. Conventional histopathological grading, which is based on the degree of tumor differentiation, has limited prognostic reliability and suffers from inter-observer variability, particularly when distinguishing low-grade from high-grade OSCC. Junior pathologists often struggle to grade accurately, underscoring the need for automated tools that improve precision. This study aims to develop a deep learning-based framework for automated OSCC segmentation and grading that addresses tumor heterogeneity and data scarcity while improving diagnostic consistency.

Methods: Four datasets were curated: (1) 750 images with manually drawn tumor masks (normal, low-grade, and high-grade); (2) color-normalized images produced by LAB color-space transformation and histogram adjustment; (3) multi-zoom augmented images (rotations and magnifications); and (4) images combining augmentation and normalization. Four architectures (U-Net, U-Net++, FCN, and DeepLabV3 with a ResNet-34 backbone) were trained under identical settings (25 epochs, batch size 32, Adam optimizer) and evaluated using the Dice coefficient, intersection over union (IoU), and accuracy. A weighted composite score was used to identify the best-performing model for each grade:

$$\text{Composite Score} = w_{\text{Dice}} \cdot \text{Dice} + w_{\text{IoU}} \cdot \text{IoU} + w_{\text{Acc}} \cdot \text{Accuracy}$$

Results: For low-grade OSCC, FCN and DeepLabV3 performed best, achieving the highest composite scores on augmented data (FCN: 242; DeepLabV3: 241). For high-grade OSCC, U-Net and U-Net++ outperformed the other architectures, with U-Net scoring highest (231) on Type 4 (augmented and normalized) data. Normalization stabilized feature extraction, whereas multi-random-zoom augmentation significantly improved generalization across heterogeneous tumor patterns. FCN's simpler design suited low-grade patterns, whereas U-Net's architecture better captured the complexity of high-grade tumors.

Conclusion: Tailoring deep learning architectures to OSCC grade improves segmentation accuracy. FCN and DeepLabV3 are best suited to low-grade detection, while U-Net variants excel in high-grade cases. Combining preprocessing strategies mitigates data limitations and offers a scalable tool for reducing diagnostic subjectivity. This approach promises to augment pathology workflows, particularly in resource-constrained settings.
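The evaluation metrics and weighted composite score used to rank the models can be sketched as follows. This is a minimal illustration under stated assumptions: masks are binary (tumor vs. background) and flattened to lists of 0/1, and all function names are hypothetical. The abstract does not say whether metrics were computed per class or per image, nor does it report the weights or the scaling behind values such as 242, so here the weights default to 1 and every metric lies between 0 and 1.

```python
from typing import Sequence, Tuple


def confusion_counts(pred: Sequence[int], target: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for two flattened binary masks (1 = tumor, 0 = background)."""
    if len(pred) != len(target) or not pred:
        raise ValueError("masks must be non-empty and the same size")
    tp = sum(1 for p, t in zip(pred, target) if p and t)
    fp = sum(1 for p, t in zip(pred, target) if p and not t)
    fn = sum(1 for p, t in zip(pred, target) if not p and t)
    tn = len(pred) - tp - fp - fn
    return tp, fp, fn, tn


def dice(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice = 2TP / (2TP + FP + FN); two empty masks count as a perfect match."""
    tp, fp, fn, _ = confusion_counts(pred, target)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


def iou(pred: Sequence[int], target: Sequence[int]) -> float:
    """IoU = TP / (TP + FP + FN); two empty masks count as a perfect match."""
    tp, fp, fn, _ = confusion_counts(pred, target)
    denom = tp + fp + fn
    return 1.0 if denom == 0 else tp / denom


def pixel_accuracy(pred: Sequence[int], target: Sequence[int]) -> float:
    """Fraction of pixels whose predicted label equals the ground truth."""
    tp, _, _, tn = confusion_counts(pred, target)
    return (tp + tn) / len(pred)


def composite_score(pred: Sequence[int], target: Sequence[int],
                    w_dice: float = 1.0, w_iou: float = 1.0, w_acc: float = 1.0) -> float:
    """Weighted sum of Dice, IoU and accuracy; the default weights are placeholders."""
    return (w_dice * dice(pred, target)
            + w_iou * iou(pred, target)
            + w_acc * pixel_accuracy(pred, target))


if __name__ == "__main__":
    pred = [1, 1, 0, 0]
    target = [1, 0, 0, 0]
    print(round(composite_score(pred, target), 4))  # 1.9167
```

For this toy pair, Dice is 2/3, IoU is 0.5 and accuracy is 0.75. Because Dice and IoU rise and fall together for a single mask pair, including both mainly gives overlap more weight relative to accuracy.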
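The abstract names LAB color-space transformation as the normalization step but does not specify the method. One commonly used option is Reinhard-style statistics matching, sketched below as a swapped-in technique that is not necessarily the authors' method. Each L, a and b channel is shifted and scaled so that its mean and standard deviation match those of a reference image. The sketch assumes the image has already been converted to LAB (for example with scikit-image's `skimage.color.rgb2lab`) and flattened to one list per channel. The histogram-adjustment step is not reproduced, and the function names are hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple


def match_channel(values: Sequence[float], target_mean: float, target_std: float) -> List[float]:
    """Shift and scale one channel so its mean and standard deviation match a reference."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        # A constant channel has no spread to rescale; only move it to the target mean.
        return [v - mean + target_mean for v in values]
    return [(v - mean) / std * target_std + target_mean for v in values]


def reinhard_normalize(lab_channels: Sequence[Sequence[float]],
                       reference_stats: Sequence[Tuple[float, float]]) -> List[List[float]]:
    """Apply per-channel statistics matching to the L, a and b channels of one image.

    reference_stats holds one (mean, std) pair per channel, measured on a reference image.
    """
    if len(lab_channels) != 3 or len(reference_stats) != 3:
        raise ValueError("expected three LAB channels and three (mean, std) pairs")
    return [match_channel(ch, m, s) for ch, (m, s) in zip(lab_channels, reference_stats)]


if __name__ == "__main__":
    # Toy 2-pixel image in LAB space and hypothetical reference statistics.
    lab = [[1.0, 3.0], [0.0, 0.0], [2.0, 4.0]]
    ref = [(50.0, 2.0), (0.0, 1.0), (10.0, 1.0)]
    print(reinhard_normalize(lab, ref))
```

The normalized channels would then be reshaped and converted back to RGB (for example with `skimage.color.lab2rgb`). The choice of reference image is an assumption, since the abstract does not name one.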
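The multi-zoom augmentation (rotations and magnifications) can be illustrated with a random crop-and-resize plus 90-degree rotations. This is a minimal sketch under stated assumptions: images and masks are 2-D lists, "zoom" is implemented by cropping a window 1/zoom the size of the input and upsampling it with nearest-neighbour indexing, and the 1x to 2x zoom range is hypothetical. The abstract does not say whether its magnifications were digital zooms or came from different microscope objectives. The key design point is that the same random window and rotation are applied to the image and its mask so the annotations stay aligned.

```python
import random
from typing import List, Optional, Tuple

Grid = List[List[int]]


def random_zoom_pair(image: Grid, mask: Grid, min_zoom: float = 1.0, max_zoom: float = 2.0,
                     rng: Optional[random.Random] = None) -> Tuple[Grid, Grid]:
    """Zoom into a random window and resize it back to the input size (hypothetical helper).

    The same window is used for the image and its mask so the labels stay aligned.
    """
    if not 1.0 <= min_zoom <= max_zoom:
        raise ValueError("require 1.0 <= min_zoom <= max_zoom")
    if len(image) != len(mask) or len(image[0]) != len(mask[0]):
        raise ValueError("image and mask must have the same shape")
    if rng is None:
        rng = random.Random()
    h, w = len(image), len(image[0])
    zoom = rng.uniform(min_zoom, max_zoom)
    # Size of the crop window; a zoom of 2.0 keeps half the height and width.
    crop_h, crop_w = max(1, int(h / zoom)), max(1, int(w / zoom))
    top = rng.randint(0, h - crop_h)
    left = rng.randint(0, w - crop_w)

    def resize(grid: Grid) -> Grid:
        # Nearest-neighbour upsampling: map each output pixel to a source pixel in the window.
        return [[grid[top + r * crop_h // h][left + c * crop_w // w] for c in range(w)]
                for r in range(h)]

    return resize(image), resize(mask)


def rotate90(grid: Grid, k: int = 1) -> Grid:
    """Rotate a 2-D grid clockwise by k * 90 degrees."""
    for _ in range(k % 4):
        grid = [list(row) for row in zip(*grid[::-1])]
    return grid


if __name__ == "__main__":
    img = [[r * 4 + c for c in range(4)] for r in range(4)]
    mask = [[1 if c >= 2 else 0 for c in range(4)] for r in range(4)]
    z_img, z_mask = random_zoom_pair(img, mask, rng=random.Random(0))
    k = 1  # use the same rotation for image and mask
    print(rotate90(z_img, k))
    print(rotate90(z_mask, k))
```

Passing a seeded `random.Random` makes the augmentation reproducible; a production pipeline would apply the equivalent operations to multi-channel image arrays rather than nested lists.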

Keywords

Oral squamous cell carcinoma, deep learning