اعتبارسنجی و اثربخشی سؤالات چندگزینهای مبتنی بر مورد تولیدشده توسط GPT-4o در آموزش داروسازی: یک مطالعه مقایسهای

کد: G-1935

نویسندگان: Amirhossein Rastineh ℗, Mandana Izadpanah *, Saman Sameri, Kaveh Eslami

زمان بندی: زمان بندی نشده!

برچسب: دستیار مجازی هوشمند

دانلود: دانلود پوستر

خلاصه مقاله:

خلاصه مقاله

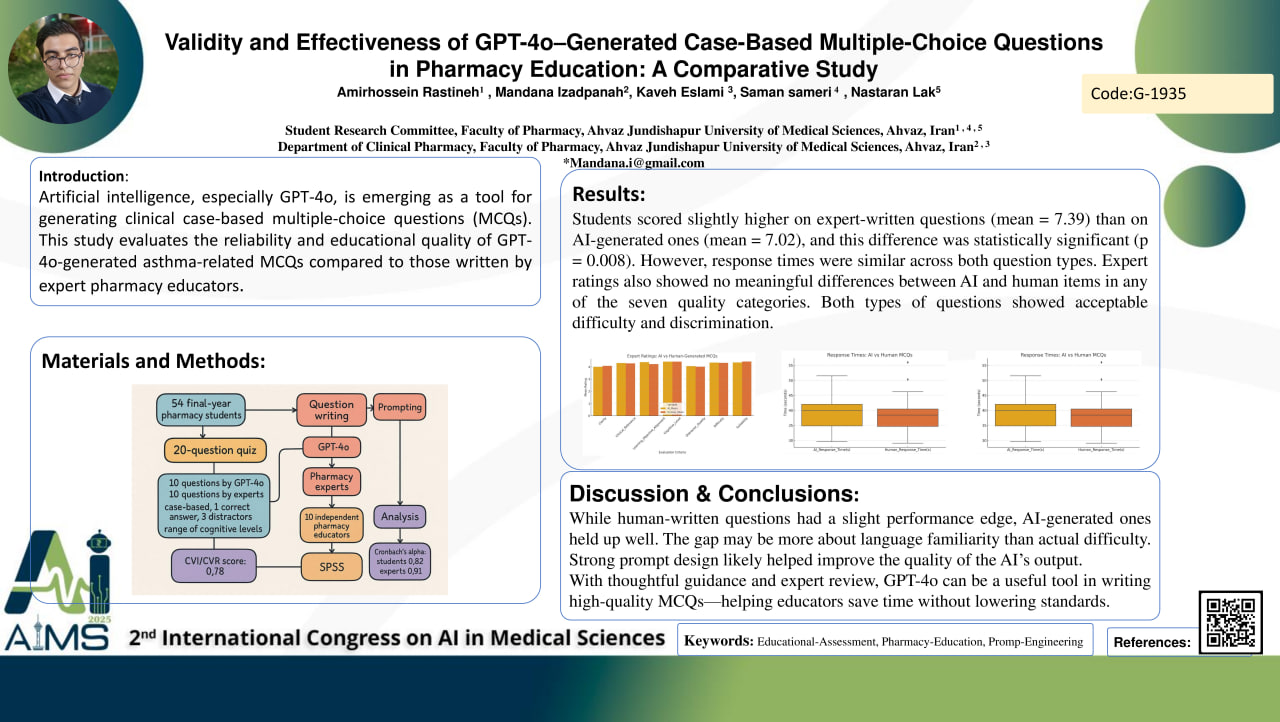

Background: Artificial intelligence (AI) is becoming an influential part of modern education, especially in how we design and deliver assessments. In pharmacy programs, MCQs play a key role in checking students’ clinical decision-making skills. With its advanced language capabilities, GPT-4o offers a new way to support the creation of meaningful, case-based questions. This study looked into how reliable and effective GPT-4o is at generating MCQs about asthma—a core topic in pharmacy education—and how these compare to questions written by experienced educators. Methods: Fifty-four final-year pharmacy students took a 20-question quiz that included 10 questions written by GPT-4o and 10 written by pharmacy experts. All questions were case-based, had one correct answer and three distractors, and were designed to cover a range of cognitive levels based on Bloom’s Taxonomy. Prompts were carefully written to guide both AI and human question writers toward balanced difficulty and strong educational value. Ten independent pharmacy educators reviewed all items for content validity using the CVI/CVR method (score: 0.78). Reliability was measured with Cronbach’s alpha (0.82 for student answers, 0.91 for expert reviews). SPSS was used for statistical analysis. Results: Students scored slightly higher on expert-written questions (mean = 7.39) than on AI-generated ones (mean = 7.02), and this difference was statistically significant (p = 0.008). However, response times were similar across both question types. Expert ratings also showed no meaningful differences between AI and human items in any of the seven quality categories. Both types of questions showed acceptable difficulty and discrimination. Discussion: While human-written questions had a slight performance edge, AI-generated ones held up well. The gap may be more about language familiarity than actual difficulty. Strong prompt design likely helped improve the quality of the AI’s output. Conclusion: With thoughtful guidance and expert review, GPT-4o can be a useful tool in writing high-quality MCQs—helping educators save time without lowering standards.

کلمات کلیدی

Educational-Assessment, Pharmacy-Education, Promp-Engineering