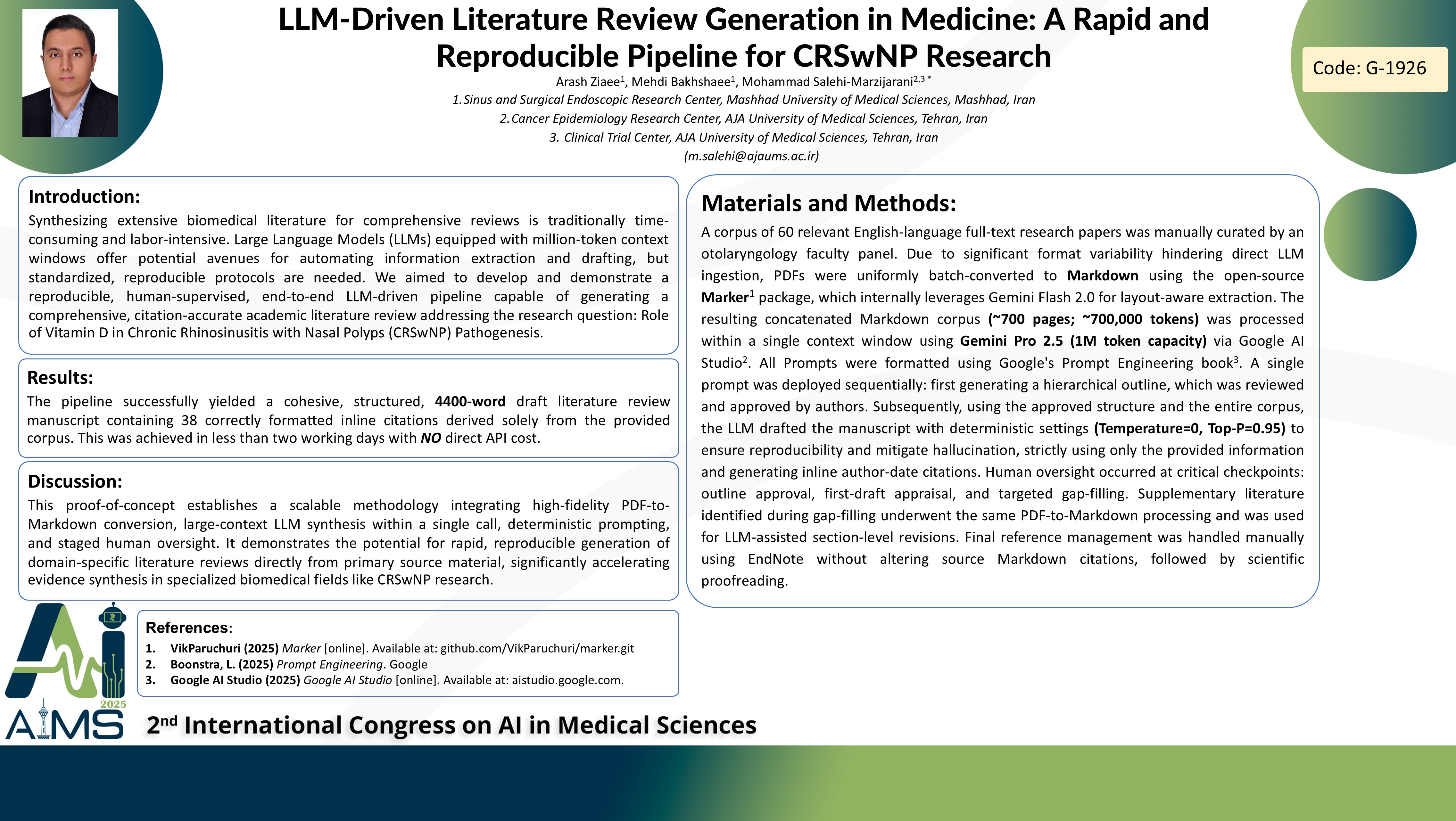

LLM-Driven Literature Review Generation in Medicine: A Rapid and Reproducible Pipeline for CRSwNP Research

Code: G-1926

Authors: Arash Ziaee ℗, Mehdi Bakhshaee, Mohammad Salehi-Marzijarani *

Schedule: Not Scheduled!

Tag: Intelligent Virtual Assistant

Abstract:

Background and aims: Synthesizing extensive biomedical literature into comprehensive reviews is traditionally time-consuming and labor-intensive. Large Language Models (LLMs) with million-token context windows offer a way to automate information extraction and drafting, but standardized, reproducible protocols are needed. We aimed to develop and demonstrate a reproducible, human-supervised, end-to-end LLM-driven pipeline capable of generating a comprehensive, citation-accurate academic literature review on the research question: the role of vitamin D in the pathogenesis of chronic rhinosinusitis with nasal polyps (CRSwNP).

Method: A corpus of 60 relevant English-language full-text research papers was manually curated by an otolaryngology faculty panel. Because format variability across the PDFs hindered direct LLM ingestion, all PDFs were batch-converted to Markdown using the open-source Marker package, which internally leverages Gemini 2.0 Flash for layout-aware extraction. The resulting concatenated Markdown corpus (~700 pages; ~700,000 tokens) was processed within a single context window using Gemini 2.5 Pro (1M-token capacity) via Google AI Studio. All prompts were formatted following Google's Prompt Engineering book. A single prompt was deployed in sequential stages: the LLM first generated a hierarchical outline, which the authors reviewed and approved; then, using the approved structure and the corpus, it drafted the manuscript with deterministic settings (Temperature=0, Top-P=0.95) to support reproducibility and mitigate hallucination, strictly using only the provided information and generating inline author-date citations. Human oversight occurred at three critical checkpoints: outline approval, first-draft appraisal, and targeted gap-filling. Supplementary literature identified during gap-filling underwent the same PDF-to-Markdown processing and was used for LLM-assisted section-level revisions. Final reference management was handled manually in EndNote, followed by scientific proofreading.

Results: The pipeline yielded a cohesive, structured, 4,400-word draft literature review containing 38 correctly formatted inline citations derived solely from the provided corpus. This was achieved in less than two working days with no direct API cost.

Conclusion: This proof-of-concept establishes a scalable methodology that integrates high-fidelity PDF-to-Markdown conversion, large-context LLM synthesis within a single call, deterministic prompting, and staged human oversight. It demonstrates the potential for rapid, reproducible generation of domain-specific literature reviews directly from primary source material, significantly accelerating evidence synthesis in specialized biomedical fields such as CRSwNP research.
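
The corpus-assembly step described in the Method could be sketched roughly as follows. This is not the authors' code: it assumes the Marker conversion has already produced one Markdown file per paper, and the function names, the per-paper source header, the four-characters-per-token heuristic, and the output reserve are all illustrative assumptions. Only the 1M-token capacity and the sampling settings come from the abstract.

```python
import math
from pathlib import Path

# Values reported in the abstract.
CONTEXT_LIMIT_TOKENS = 1_000_000
GENERATION_SETTINGS = {"temperature": 0.0, "top_p": 0.95}

# Assumption: a rough heuristic for English text, not a real tokenizer.
CHARS_PER_TOKEN = 4


def build_corpus(markdown_dir):
    """Concatenate all .md files in a directory into one string.

    Files are read in sorted order so the same inputs always give the
    same corpus. Each paper gets a (hypothetical) source header so that
    citations can be traced back to a file.
    """
    parts = []
    for path in sorted(Path(markdown_dir).glob("*.md")):
        text = path.read_text(encoding="utf-8").strip()
        parts.append(f"<!-- SOURCE: {path.name} -->\n{text}\n")
    return "\n".join(parts)


def estimate_tokens(text):
    """Approximate the token count from the character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text, reserve_for_output=50_000):
    """Check that the corpus plus an output reserve stays under the limit."""
    return estimate_tokens(text) + reserve_for_output <= CONTEXT_LIMIT_TOKENS
```

A check like `fits_in_context(build_corpus("converted_papers"))` (with a hypothetical directory name) would be run before submitting the corpus in a single call. For an exact count, the model provider's own token-counting facility should replace the heuristic.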

Keywords

LLM, Evidence Synthesis, Automated Literature Review, Human Oversight