Comparative Performance Analysis of Large Language Models: ChatGPT, Google Gemini, and DeepSeek in Approach to Resistant Hypertension

Code: G-1890

Authors: Fatemeh Zahra Seyed-Kolbadi ℗, Sara Ghazizadeh, Ali Khorram, Ghazal Rezaee, Fatemeh Ardali, Shahin Abbaszadeh *

Schedule: Not Scheduled!

Tag: Clinical Decision Support System

Download: Download Poster

Abstract:

Abstract

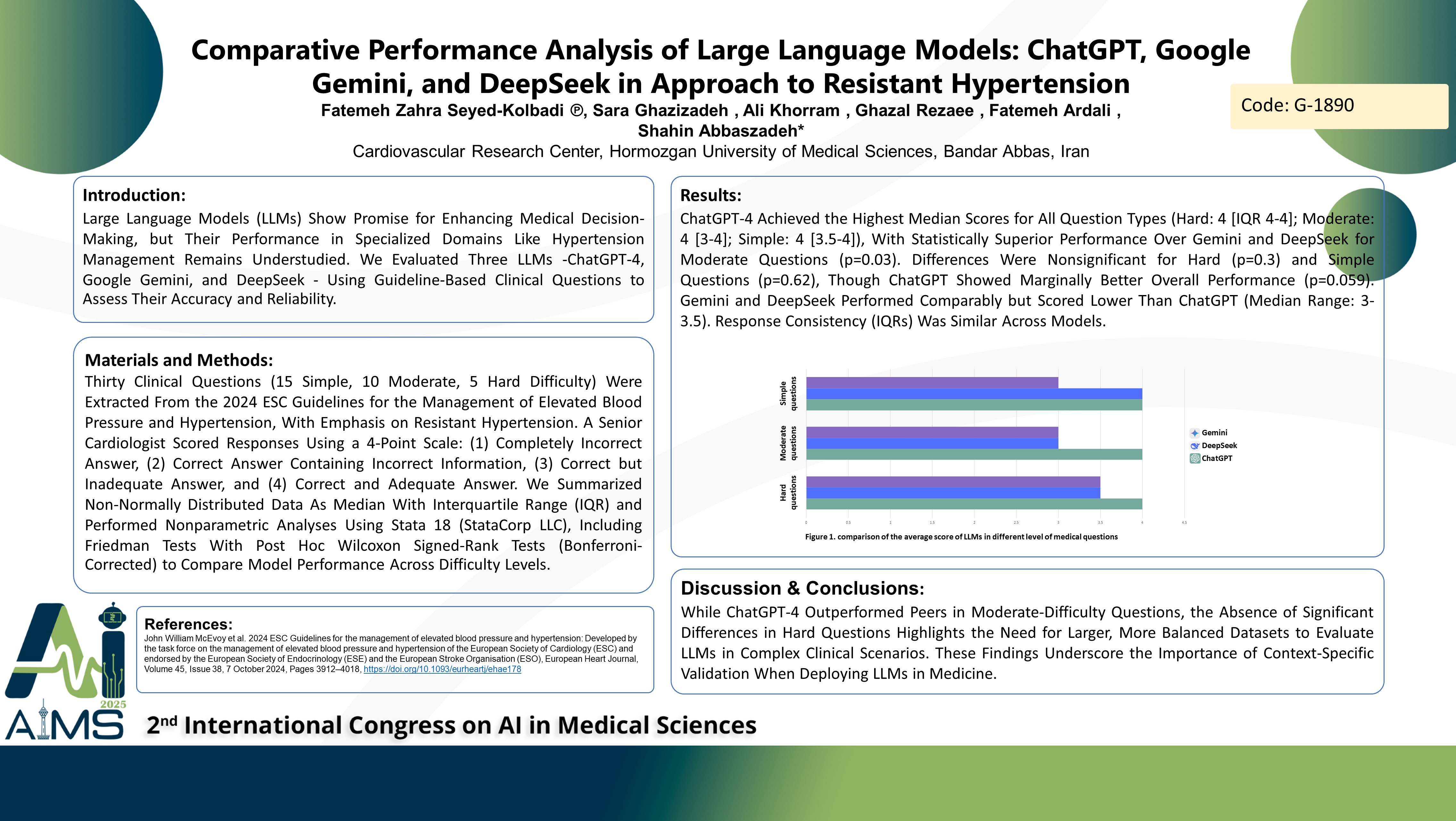

Background: Large Language Models (LLMs) Show Promise for Enhancing Medical Decision-Making, but Their Performance in Specialized Domains Like Hypertension Management Remains Understudied. We Evaluated Three LLMs - ChatGPT-4, Google Gemini, and DeepSeek - Using Guideline-Based Clinical Questions to Assess Their Accuracy and Reliability. Methods: Thirty Clinical Questions (15 Simple, 10 Moderate, 5 Hard Difficulty) Were Extracted From the 2024 ESC Guidelines for the Management of Elevated Blood Pressure and Hypertension, With Emphasis on Resistant Hypertension. A Senior Cardiologist Scored Responses Using a 4-Point Scale: (1) Completely Incorrect Answer, (2) Correct Answer Containing Incorrect Information, (3) Correct but Inadequate Answer, and (4) Correct and Adequate Answer. We Summarized Non-Normally Distributed Data As Median With Interquartile Range (IQR) and Performed Nonparametric Analyses Using Stata 18 (StataCorp LLC), Including Friedman Tests With Post Hoc Wilcoxon Signed-Rank Tests (Bonferroni-Corrected) to Compare Model Performance Across Difficulty Levels. Results: ChatGPT-4 Achieved the Highest Median Scores for All Question Types (Hard: 4 [IQR 4-4]; Moderate: 4 [3-4]; Simple: 4 [3.5-4]), With Statistically Superior Performance Over Gemini and DeepSeek for Moderate Questions (p=0.03). Differences Were Nonsignificant for Hard (p=0.3) and Simple Questions (p=0.62), Though ChatGPT Showed Marginally Better Overall Performance (p=0.059). Gemini and DeepSeek Performed Comparably but Scored Lower Than ChatGPT (Median Range: 3-3.5). Response Consistency (IQRs) Was Similar Across Models. Conclusion: While ChatGPT-4 Outperformed Peers in Moderate-Difficulty Questions, the Absence of Significant Differences in Hard Questions - Coupled With the Small Sample of Hard Queries - Highlights the Need for Larger, More Balanced Datasets to Robustly Evaluate LLMs in Complex Clinical Scenarios. These Findings Underscore the Importance of Context-Specific Validation When Deploying LLMs in Medicine.

Keywords

LLMs, Hypertension, AI Validation