Evaluating the Performance of Artificial Intelligence Chatbots in Medical Examinations: A Scoping Review

Code: G-1784

Authors: Meisam Dastani ℗, Mahnaz Mohseni *

Tag: Intelligent Virtual Assistant

Abstract:

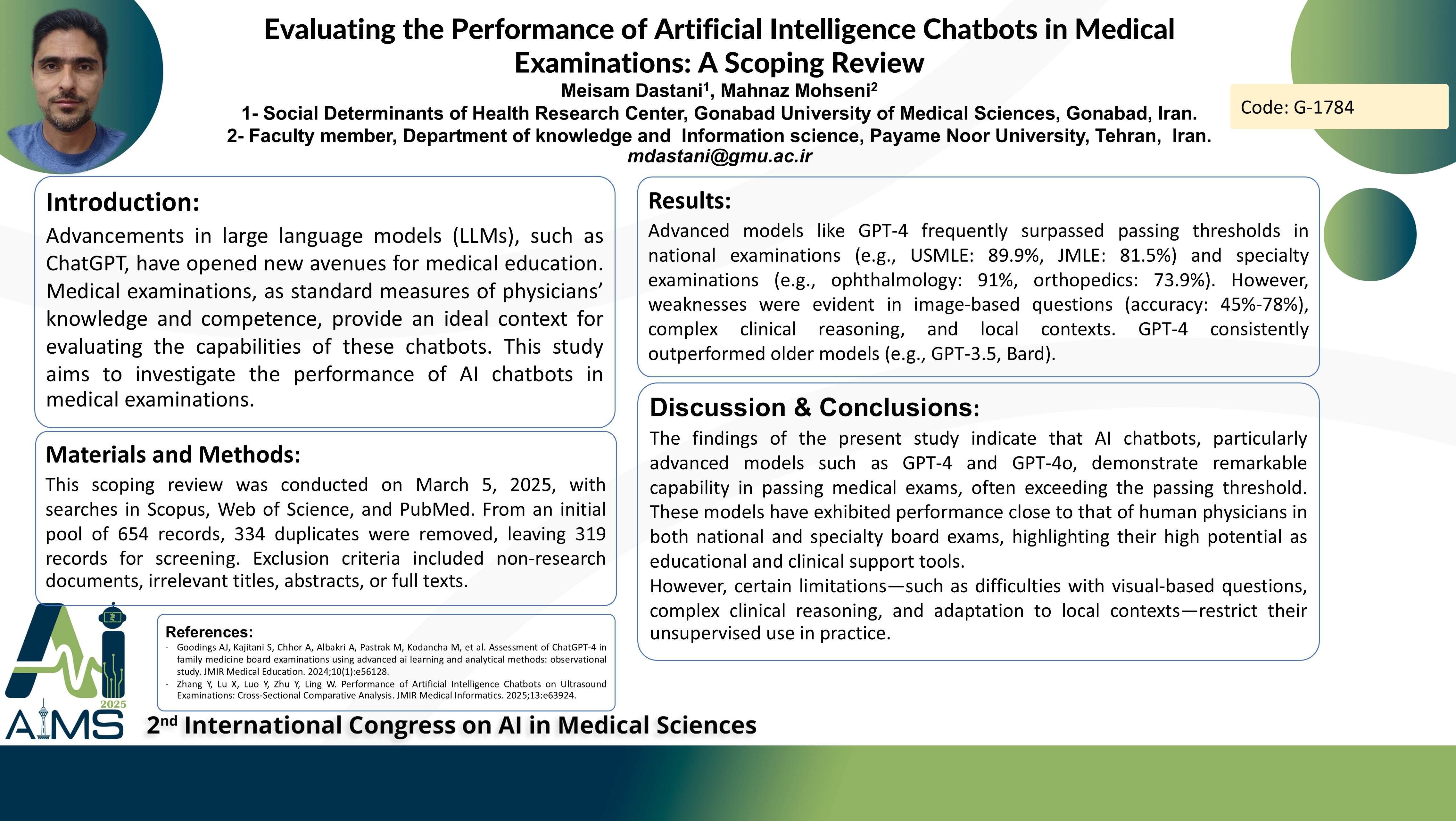

Introduction: Advances in large language models (LLMs), such as ChatGPT, have opened new avenues for medical education. Medical examinations, as standard measures of physicians’ knowledge and competence, provide an ideal context for evaluating the capabilities of LLM-based chatbots. This study aims to investigate the performance of AI chatbots in medical examinations.

Methods: The literature search for this scoping review was conducted on March 5, 2025, in Scopus (263 records), Web of Science (196 records), and PubMed (195 records). From an initial pool of 654 records, 334 duplicates were removed, leaving 319 records for screening. Records were excluded if they were not research documents or if their title, abstract, or full text was irrelevant to the topic. Data from eligible studies were extracted, analyzed descriptively, and categorized into national, specialty, and preclinical examinations.

Findings: Advanced models such as GPT-4 frequently exceeded passing thresholds in national examinations (e.g., USMLE: 89.9%; JMLE: 81.5%) and specialty examinations (e.g., ophthalmology: 91%; orthopedics: 73.9%). However, weaknesses were evident in image-based questions (accuracy: 45%–78%), complex clinical reasoning, and questions that depend on local context. GPT-4 consistently outperformed earlier or competing models (e.g., GPT-3.5, Bard).

Conclusion: AI chatbots show considerable potential as educational tools in medicine, but limitations in multimodal processing and cultural adaptation persist. Future research should focus on addressing these limitations and on integrating chatbots into medical education frameworks.

Keywords

AI Chatbots, LLMs, Medical Examinations, ChatGPT