AI Guidelines in Healthcare: A Systematic Review of Reporting Frameworks, Quality Assessment, Clinical Implementation, Ethical Governance, and Technical Standards (2015-2024)

Code: G-1768

Authors: Najibeh Mohseni MoallemKolaei ℗, Parisa Yousefi Konjdar, Leila Shokrizade Arani *

Tag: Health Policy, Law & Management in AI

Abstract:

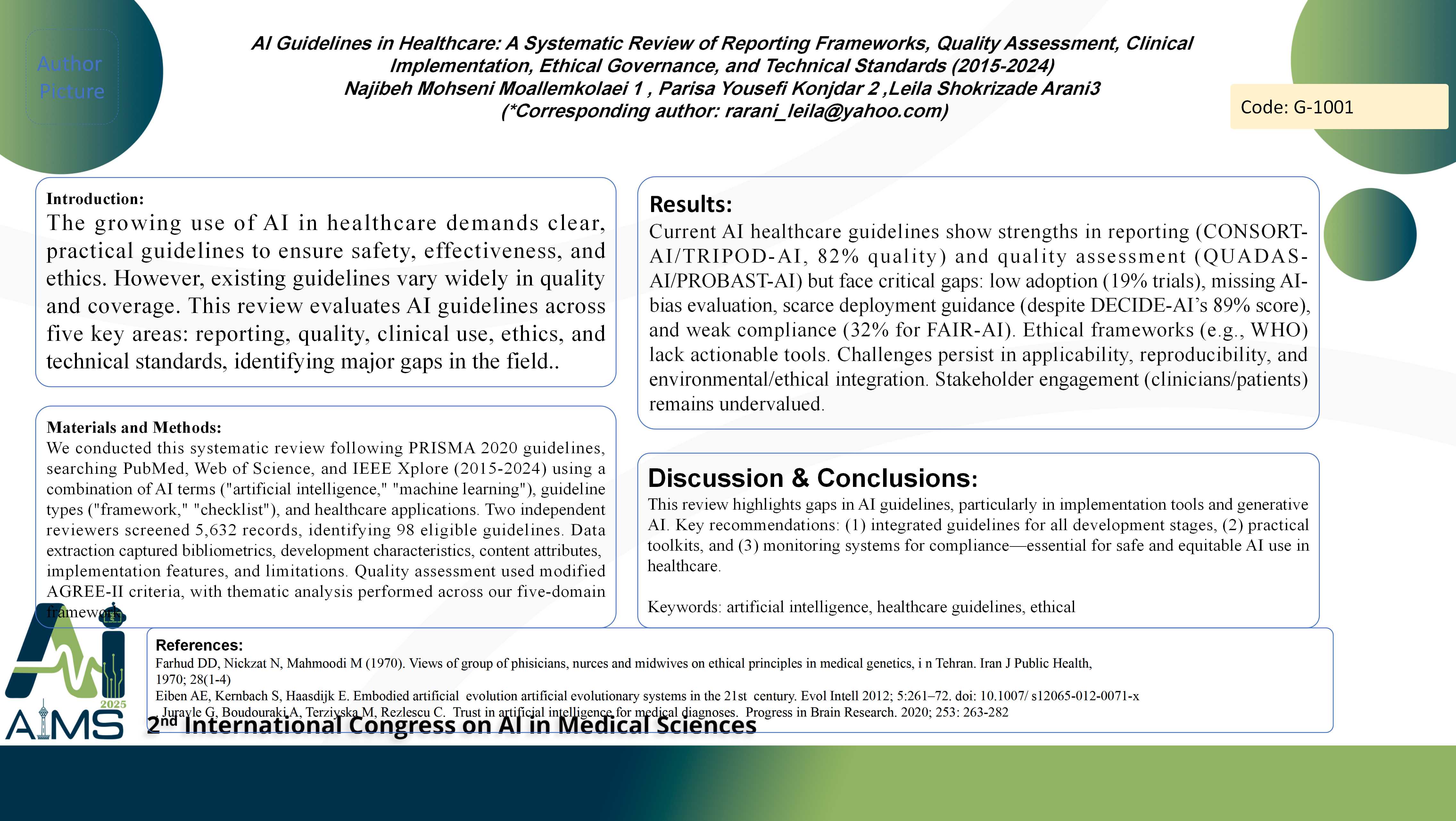

Background and aims: The growing use of AI in healthcare demands clear, practical guidelines to ensure safety, effectiveness, and ethical practice. However, existing guidelines vary widely in quality and coverage. This review evaluates AI guidelines across five domains (reporting, quality assessment, clinical implementation, ethical governance, and technical standards) and identifies major gaps in the field.

Method: We conducted this systematic review following the PRISMA 2020 guidelines, searching PubMed, Web of Science, and IEEE Xplore (2015-2024) using combinations of AI terms ("artificial intelligence," "machine learning"), guideline types ("framework," "checklist"), and healthcare applications. Two independent reviewers screened 5,632 records and identified 98 eligible guidelines. Data extraction captured bibliometrics, development characteristics, content attributes, implementation features, and limitations. Quality was assessed with modified AGREE-II criteria, and thematic analysis was performed across the five-domain framework.

Results: Current AI healthcare guidelines are strongest in reporting (CONSORT-AI/TRIPOD-AI, 82% quality score) and quality assessment (QUADAS-AI/PROBAST-AI), but critical gaps remain: low adoption (19% of trials), missing AI-bias evaluation, scarce deployment guidance (despite DECIDE-AI's 89% score), and weak compliance (32% for FAIR-AI). Ethical frameworks (e.g., WHO) lack actionable tools. Challenges persist in applicability, reproducibility, and the integration of environmental and ethical considerations. Engagement of stakeholders (clinicians and patients) remains undervalued.

Conclusion: This review highlights gaps in AI guidelines, particularly in implementation tools and generative AI. Key recommendations are: (1) integrated guidelines covering all development stages, (2) practical toolkits, and (3) monitoring systems for compliance, all of which are essential for safe and equitable AI use in healthcare.
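As an illustration of the search strategy described in the Method, the following minimal sketch combines the three concept groups into a single Boolean query string. The AI and guideline terms are those quoted in the abstract; the healthcare terms, the function names, and the query syntax are assumptions made for illustration only, since the abstract does not report the exact strings or database-specific syntax used.

```python
def or_group(terms):
    """Quote each term, join the terms with OR, and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups):
    """Join the OR-groups with AND, one group per search concept."""
    return " AND ".join(or_group(g) for g in groups)


# Terms quoted in the abstract.
ai_terms = ["artificial intelligence", "machine learning"]
guideline_terms = ["framework", "checklist"]

# Hypothetical placeholder terms: the abstract mentions "healthcare
# applications" but does not list the actual search terms.
healthcare_terms = ["healthcare", "clinical"]

query = build_query(ai_terms, guideline_terms, healthcare_terms)
print(query)
```

Each database (PubMed, Web of Science, IEEE Xplore) has its own field tags and operators, so a string like this would need adapting before use in any of them.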

Keywords

Artificial Intelligence, Healthcare Guidelines, Ethical