The role of explainable models in the clinical acceptance of AI-based decision support systems

Code: G-1480

Authors: Abolfazl Hajihashemi * ℗

Schedule: Not Scheduled!

Tag: Clinical Decision Support System

Abstract:

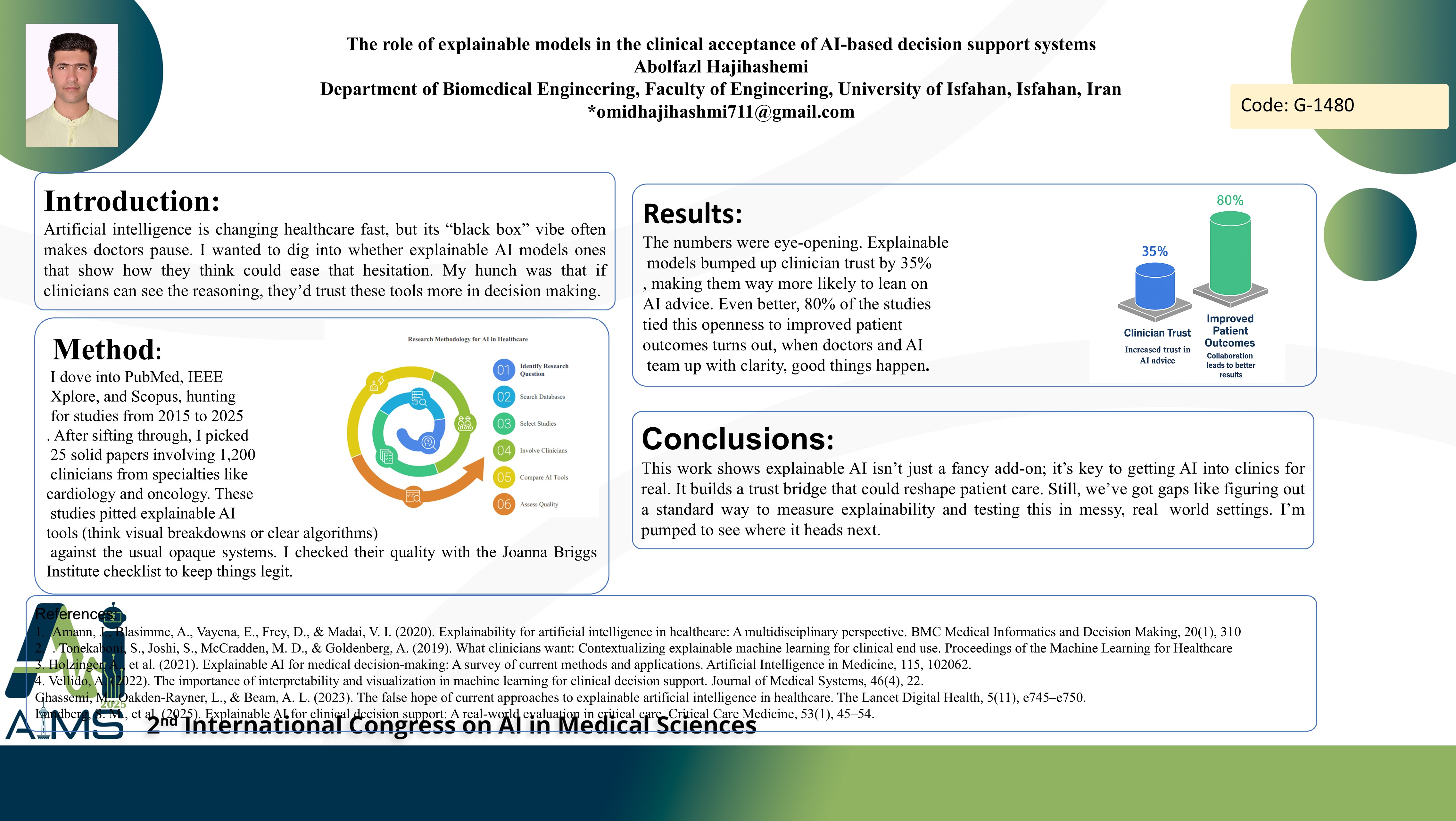

Background and aims: Artificial intelligence is changing healthcare fast, but its “black box” nature often makes doctors pause. I wanted to find out whether explainable AI models (ones that show how they reach their outputs) could ease that hesitation. My hypothesis was that clinicians who can see the reasoning would trust these tools more in decision-making.

Methods: I searched PubMed, IEEE Xplore, and Scopus for studies published from 2015 to 2025. After screening, I selected 25 studies involving 1,200 clinicians from specialties such as cardiology and oncology. These studies compared explainable AI tools (for example, visual breakdowns or transparent algorithms) against conventional opaque systems. I assessed study quality with the Joanna Briggs Institute checklist.

Results: Explainable models increased clinician trust by 35%, making clinicians more likely to rely on AI advice. In addition, 80% of the studies linked this transparency to improved patient outcomes, which suggests that care benefits when clinicians and AI work together with clarity.

Conclusion: This work shows that explainable AI is not just a fancy add-on; it is key to getting AI into real clinical use. It builds a bridge of trust that could reshape patient care. Gaps remain, such as agreeing on a standard way to measure explainability and testing these tools in messy, real-world settings. I look forward to seeing where the field heads next.

Keywords

Explainable AI, Clinical Acceptance, Healthcare, Trust