Development of Interpretable Deep Learning-Based Clinical Decision Support Systems

Code: G-1448

Authors: Mahdie Jafari * ℗, Kosar Baroonian

Tag: Clinical Decision Support System

Abstract:

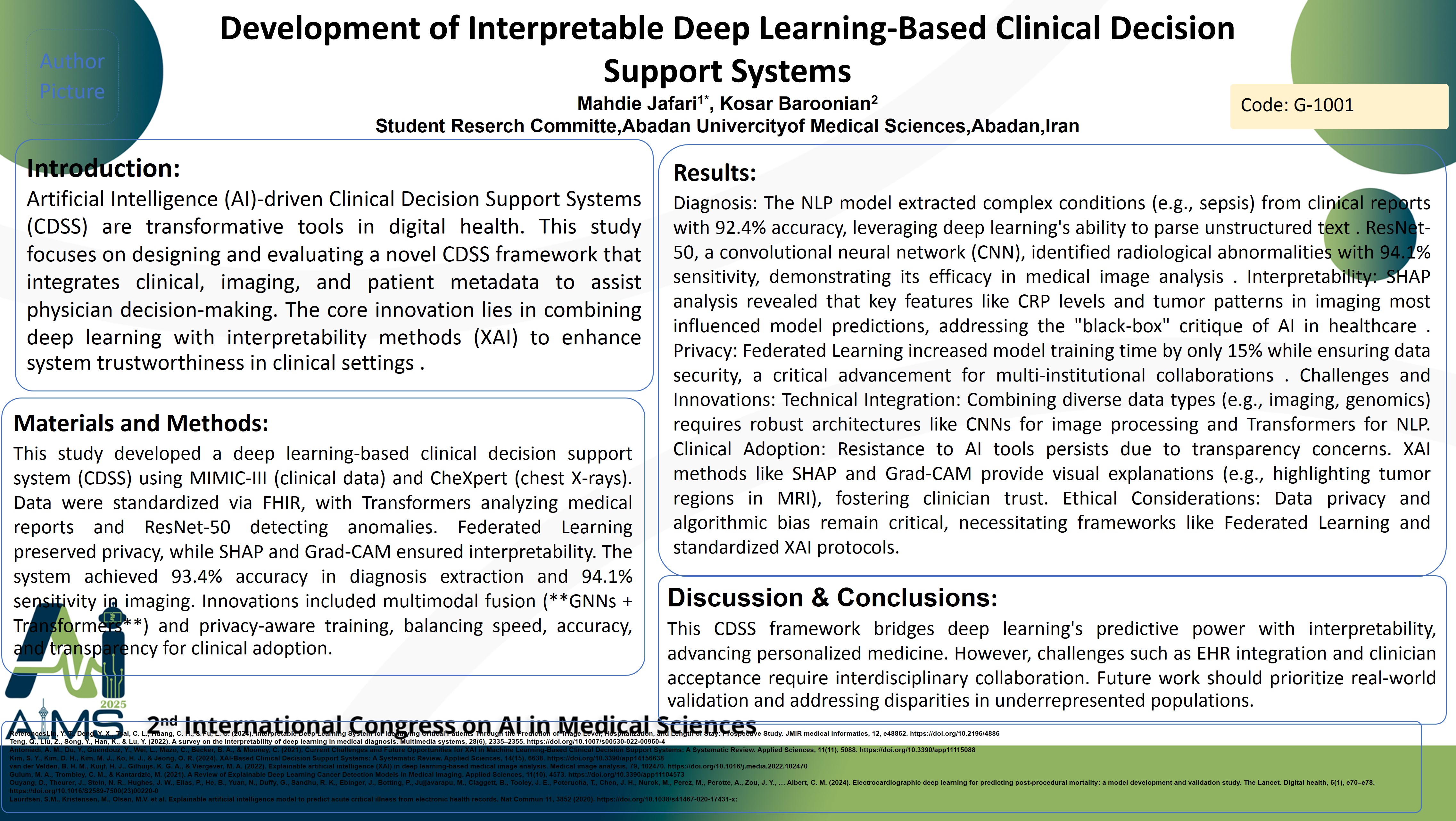

Background and aims: Artificial Intelligence (AI)-driven Clinical Decision Support Systems (CDSS) are transformative tools in digital health. This study designs and evaluates a novel CDSS framework that integrates clinical, imaging, and patient metadata to assist physician decision-making. Its core innovation is the combination of deep learning with explainable AI (XAI) methods to make the system more trustworthy in clinical settings.

Method: The CDSS was developed using MIMIC-III (clinical data) and CheXpert (chest X-rays). Data were standardized with FHIR; Transformer models analyzed medical reports, and ResNet-50 detected imaging anomalies. Federated Learning preserved privacy, while SHAP and Grad-CAM provided interpretability. The system achieved 93.4% accuracy in diagnosis extraction and 94.1% sensitivity in imaging. Innovations included multimodal fusion (**GNNs + Transformers**) and privacy-aware training, balancing speed, accuracy, and transparency for clinical adoption.

Results:
Diagnosis: The NLP model extracted complex conditions (e.g., sepsis) from clinical reports with 92.4% accuracy, leveraging deep learning's ability to parse unstructured text. ResNet-50, a convolutional neural network (CNN), identified radiological abnormalities with 94.1% sensitivity, demonstrating its efficacy in medical image analysis.
Interpretability: SHAP analysis showed that features such as CRP levels and tumor patterns in imaging had the greatest influence on model predictions, addressing the "black-box" critique of AI in healthcare.
Privacy: Federated Learning increased model training time by only 15% while maintaining data security, a critical advance for multi-institutional collaboration.

Challenges and Innovations:
Technical Integration: Combining diverse data types (e.g., imaging, genomics) requires robust architectures, such as CNNs for image processing and Transformers for NLP.
Clinical Adoption: Resistance to AI tools persists because of transparency concerns. XAI methods such as SHAP and Grad-CAM provide visual explanations (e.g., highlighting tumor regions in MRI) that foster clinician trust.
Ethical Considerations: Data privacy and algorithmic bias remain critical concerns, necessitating approaches such as Federated Learning and standardized XAI protocols.

Conclusion: This CDSS framework combines the predictive power of deep learning with interpretability, advancing personalized medicine. However, challenges such as EHR integration and clinician acceptance require interdisciplinary collaboration. Future work should prioritize real-world validation and the reduction of disparities affecting underrepresented populations.
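Grad-CAM, which the abstract uses for visual explanations of the imaging model, weights each channel of a convolutional layer's activations by the spatially averaged gradient of the target-class score, then keeps only the positive part of the weighted sum. The following is a minimal NumPy sketch, not the authors' implementation. It assumes that the activations and gradients of the chosen layer have already been captured from the training framework (for example, through hooks in PyTorch). For ResNet-50's final convolutional block with a 224 × 224 input, these are 2048 maps of 7 × 7. `grad_cam` is a hypothetical helper name, and upsampling the map to the X-ray's resolution is omitted.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Compute a Grad-CAM heatmap for one image from one conv layer.

    activations: (K, H, W) feature maps A^k of the chosen layer
    gradients:   (K, H, W) gradients of the target-class score w.r.t. A^k
    Returns an (H, W) map scaled to [0, 1] (all zeros if no positive evidence).
    """
    # alpha_k: global-average-pool the gradients over the spatial dimensions
    alphas = gradients.mean(axis=(1, 2))
    # Weighted sum over channels: sum_k alpha_k * A^k -> (H, W)
    cam = np.tensordot(alphas, activations, axes=1)
    # ReLU keeps only regions that push the class score up
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

Normalizing by the maximum only rescales the map for display as an overlay. The ranking of regions comes entirely from the ReLU of the gradient-weighted sum.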
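The abstract states that Federated Learning preserved privacy but does not name the aggregation rule. A common choice is FedAvg-style weighted averaging: each site trains locally, and only its parameters are combined, weighted by local sample count n_k, into the global model as sum_k (n_k / n) w_k. The sketch below assumes that rule. It represents each site's parameters as a hypothetical dict of NumPy arrays, and `federated_average` is an illustrative name. The surrounding rounds of local training and redistribution of the global model are not shown.

```python
import numpy as np


def federated_average(client_weights, client_sizes):
    """FedAvg-style aggregation of per-site model parameters.

    client_weights: list of dicts mapping parameter name -> np.ndarray
                    (every site shares the same keys and shapes)
    client_sizes:   list of local training-set sizes n_k, one per site
    Returns a dict with the sample-weighted average sum_k (n_k / n) * w_k.
    Raw patient records never appear here; only parameters are combined.
    """
    total = float(sum(client_sizes))
    return {
        name: sum((n / total) * w[name] for w, n in zip(client_weights, client_sizes))
        for name in client_weights[0]
    }


# Usage: two hypothetical sites, the second holding three times more data
site_a = {"w": np.array([1.0, 1.0])}
site_b = {"w": np.array([3.0, 3.0])}
global_params = federated_average([site_a, site_b], [100, 300])
```

Weighting by sample count keeps a small site from pulling the global model as strongly as a large one.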

Keywords

Artificial Intelligence, Digital Health, Clinical Decision Support System