Integrating Explainable AI into Deep Learning Models for MRI-Based Alzheimer’s Disease Diagnosis: A Review

Code: G-1036

Authors: Laleh Rahmanian * ℗

Schedule: Not Scheduled!

Tag: Biomedical Signal Processing

Abstract:

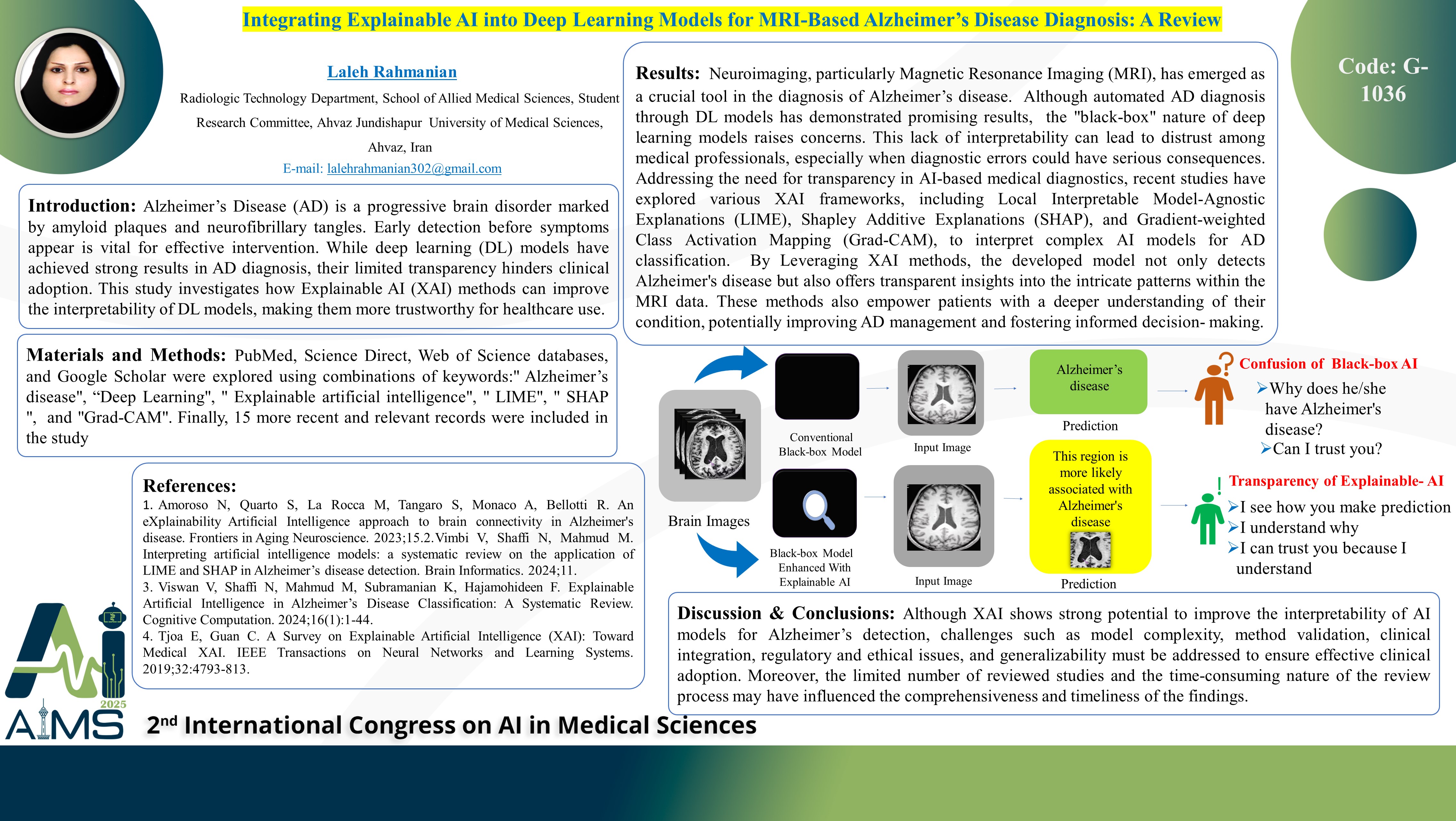

Background and aims: Alzheimer’s disease (AD) is a progressive neurodegenerative disorder characterized by the accumulation of amyloid plaques and neurofibrillary tangles in the brain. Detecting AD in its early stages, before clinical manifestations appear, is crucial for timely treatment. Although deep learning (DL) models have shown promising results in diagnosing AD, their lack of transparency and interpretability is a significant barrier to adoption by healthcare professionals. This review addresses that challenge by examining how Explainable Artificial Intelligence (XAI) techniques are applied to make DL models for AD diagnosis more interpretable.

Method: PubMed, ScienceDirect, Web of Science, and Google Scholar were searched using combinations of the keywords "Alzheimer’s disease", "Deep Learning", "Explainable artificial intelligence", "LIME", "SHAP", and "Grad-CAM". Fifteen recent and relevant records were ultimately included in the review.

Results: Neuroimaging, particularly Magnetic Resonance Imaging (MRI), has emerged as a crucial tool in the diagnosis of AD. Although automated AD diagnosis with DL models has demonstrated promising results, the "black-box" nature of these models raises concerns. The lack of interpretability can lead to distrust among medical professionals, especially when diagnostic errors could have serious consequences. To address the need for transparency in AI-based medical diagnostics, recent studies have explored various XAI frameworks, including Local Interpretable Model-Agnostic Explanations (LIME), Shapley Additive Explanations (SHAP), and Gradient-weighted Class Activation Mapping (Grad-CAM), to interpret complex AI models for AD classification. By leveraging XAI methods, these models not only detect AD but also offer transparent insights into the intricate patterns within the MRI data. These methods may also give patients a deeper understanding of their condition, potentially improving AD management and fostering informed decision-making.

Conclusion: While XAI holds great promise for improving the interpretability of AI models in AD detection, several challenges need to be addressed to enhance its effectiveness and acceptance in clinical practice: the interpretability of complex models, the validation of XAI methods, integration into the clinical workflow, regulatory and ethical considerations, and generalizability.
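For readers unfamiliar with Grad-CAM, one of the XAI methods named in the Results, the following is a minimal sketch of its core computation and is not taken from any of the reviewed studies. It assumes that the feature maps of a convolutional layer and the gradients of the class score with respect to those maps have already been extracted from a trained model; the function name `grad_cam` and the toy 2×2 inputs are hypothetical and chosen only for illustration.

```python
from typing import List

Matrix = List[List[float]]


def grad_cam(feature_maps: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Return a Grad-CAM heatmap scaled to [0, 1].

    feature_maps: K activation maps (H x W each) from one convolutional layer.
    gradients:    K maps of d(class score)/d(activation), same shape.
    Both inputs are assumed to be precomputed by the DL framework in use.
    """
    if not feature_maps or len(feature_maps) != len(gradients):
        raise ValueError("feature_maps and gradients must be non-empty and equal in length")

    height, width = len(feature_maps[0]), len(feature_maps[0][0])

    # Channel weights: global average pooling of the gradients of each channel.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]

    # Weighted sum of the feature maps, followed by ReLU to keep only
    # regions that contribute positively to the class score.
    heatmap = [
        [
            max(0.0, sum(w * fm[i][j] for w, fm in zip(weights, feature_maps)))
            for j in range(width)
        ]
        for i in range(height)
    ]

    # Scale to [0, 1] so the map can be overlaid on the input image.
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[value / peak for value in row] for row in heatmap]
    return heatmap


# Toy example: channel 0 supports the class, channel 1 opposes it.
maps = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(maps, grads)
print(heatmap)  # [[1.0, 0.0], [0.0, 0.0]]
```

In an MRI setting, the resulting heatmap would typically be upsampled to the resolution of the input slice and overlaid on it, so that a clinician can see which regions drove the prediction.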

Keywords

Alzheimer’s disease, Deep Learning, Explainable AI